The Department has a broad and diverse range of expertise.

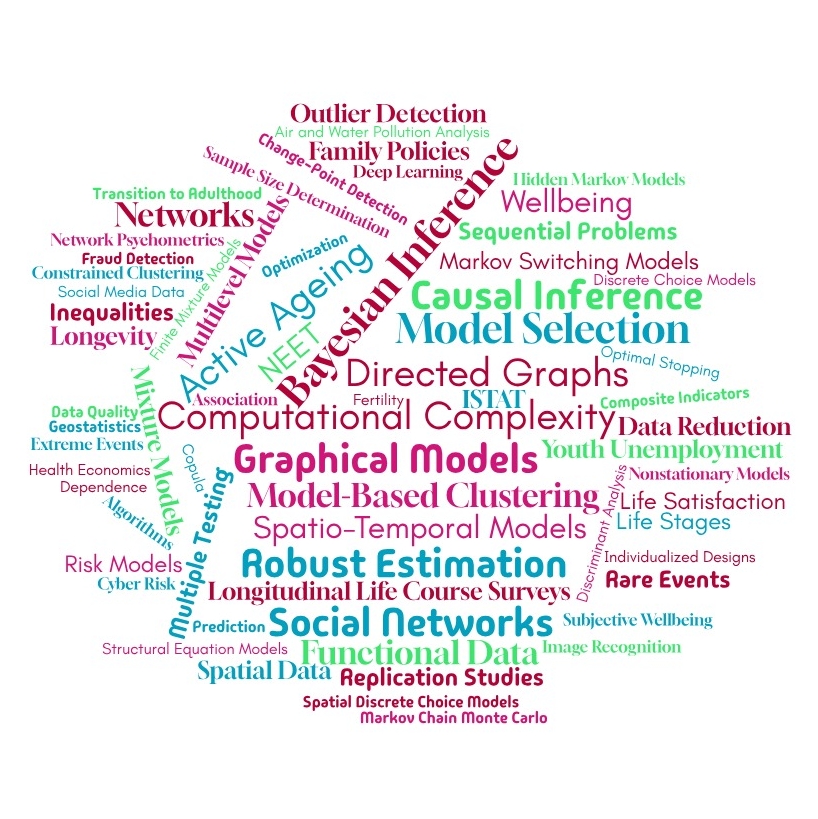

In Statistics: Bayesian statistics, graphical models and Bayesian networks, causal inference, functional data analysis, multivariate analysis, regression models, sampling designs, design of experiments, spatial data and geostatistics, time series and extreme values, sequential analysis, clustering, ranking, copula models, risk models, and text data. Application areas of the methodologies developed include genetics and personalized medicine, as well as finance and insurance.

In Economic Statistics: data quality, spatial econometrics, health economics, economic inequality, and microeconomics.

In Demography and Social Statistics: fertility, intentions and life planning, youth conditions and NEETs, ageing and new life stages, work-family balance, the impact of the SARS-CoV-2 pandemic, personal and support networks, social media data and youth unemployment, family and relationship arrangements, social isolation, composite indicators with categorical data, Bayesian demography, validation of psychometric scales, the multidimensional Rasch model, and multilevel models.

In Computer Science: computational complexity, balanced clustering algorithms, image recognition, and deep learning.